After using PowerShell for about a year, I've actually come to love it. I'm not saying it's perfect, or that it's the best it could be. PowerShell is not as powerful as Perl and it's not as native as Bash, but what it does very well is work with .Net.

When Microsoft created the .Net Framework, they built a fantastically powerful platform that can be extended and shaped to fit almost any need. Like any good object-oriented framework, it shipped with a strong set of base classes that let both Microsoft and third parties build robust solutions quickly and easily. Many Microsoft products now ship .Net assemblies that expose the product's features through the framework. This is true not only of desktop products like Office, Visio, and PowerPoint, but also of back-end products like Exchange, Windows itself, and Active Directory! And PowerShell can access it all.

So, what's the best way to get started with PowerShell? I found that learning PowerShell was like learning any other programming language: you need an itch to scratch. Anyone can write a "Hello World" app; even people with no programming experience at all can do it, especially in PowerShell. But to really learn the ins and outs of a language, you need to apply it to an actual problem you have.

So, let's look at an example. Say you're in charge of a group of servers and need to check some statistics on all of them. We know that WMI already contains that data and more. And wouldn't you know it, one of PowerShell's cmdlets, Get-WmiObject, returns an object containing WMI data! Let's take a quick look:

```powershell
$wmidata = Get-WmiObject Win32_ComputerSystem
```

That's it! We now have an object that contains the WMI data from Win32_ComputerSystem. Sure, you could do this much in VBScript, but it wouldn't be nearly as simple! And we're about to demolish what VBScript can do!

```powershell
$wmidata | Get-Member
```

The output starts with:

```
TypeName: System.Management.ManagementObject#root\cimv2\Win32_ComputerSystem
```

OK, so this object is actually a .Net Framework type from the System.Management namespace. We've also met Get-Member, a cmdlet that lists all of the methods, properties, and ScriptMethods an object exposes! At this point, Doc Brown would say "Great Scott!"

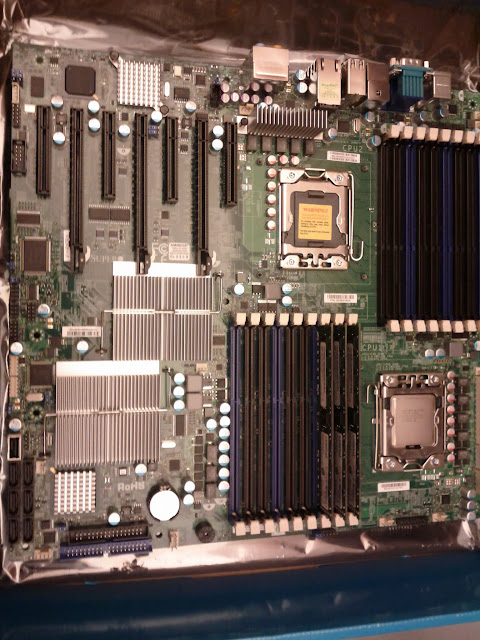
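If you want to poke at Get-Member without a WMI connection handy, here's a minimal sketch that runs on any machine with PowerShell. It uses Get-Date as a stand-in object (my choice, not part of the original example) and the -MemberType filter to show only properties; the same filter works on $wmidata.

```powershell
# Get-Date is only a stand-in; any object can be piped to Get-Member.
$now = Get-Date

# -MemberType Property hides the methods and shows just the data fields.
$now | Get-Member -MemberType Property

# Get-Member emits objects too, so its output can be filtered like anything else.
$propertyNames = ($now | Get-Member -MemberType Property).Name
"DayOfWeek is a property: " + ($propertyNames -contains 'DayOfWeek')
```

Because Get-Member returns objects rather than text, you can sort, filter, or count its results just like any other pipeline output.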

Alright, we want to actually do something here, so let's get to it. We want to collect system information for several computers on our network. Because we're admins, and we love being informed...right?

```powershell
$wmidata = Get-WmiObject -ComputerName somecomputer Win32_ComputerSystem

"Machine name: " + $wmidata.Name
"Model: " + $wmidata.Model
"Manufacturer: " + $wmidata.Manufacturer
"Logged on user: " + $wmidata.UserName
"Total RAM: " + $wmidata.TotalPhysicalMemory
```

Our output (TotalPhysicalMemory is reported in bytes):

```
Machine name: somecomputer
Model: OptiPlex 755
Manufacturer: Dell Inc.
Logged on user: MYDOMAIN\auser
Total RAM: 4158242816
```
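Since $wmidata is an object, not text, another option is to build a summary object instead of concatenating strings. Here's a minimal sketch; ConvertTo-SystemSummary is a hypothetical helper name of my own, and the stand-in $sample object just reuses the values from the output above so you can try it without a remote machine.

```powershell
function ConvertTo-SystemSummary {
    # Hypothetical helper: turns a Win32_ComputerSystem object (or anything
    # with the same property names) into a compact summary object.
    param(
        [Parameter(Mandatory, ValueFromPipeline)]
        $InputObject
    )
    process {
        [pscustomobject]@{
            MachineName  = $InputObject.Name
            Model        = $InputObject.Model
            Manufacturer = $InputObject.Manufacturer
            LoggedOnUser = $InputObject.UserName
            # TotalPhysicalMemory is in bytes; convert to GB and round
            TotalRamGB   = [math]::Round($InputObject.TotalPhysicalMemory / 1GB, 2)
        }
    }
}

# Against a real machine (Windows PowerShell, with permission) it would be:
# Get-WmiObject -ComputerName somecomputer Win32_ComputerSystem | ConvertTo-SystemSummary

# Stand-in object using the values from the sample output, for trying it offline
$sample = [pscustomobject]@{
    Name = 'somecomputer'; Model = 'OptiPlex 755'; Manufacturer = 'Dell Inc.'
    UserName = 'MYDOMAIN\auser'; TotalPhysicalMemory = 4158242816
}
$summary = $sample | ConvertTo-SystemSummary
$summary
```

Because the result is an object, it can be piped on to Format-Table or Export-Csv like any other PowerShell output.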

Ok, so far this has saved me about 20 minutes of VBScript and it's got me all this data! And here's a neat trick...

```powershell
"Total RAM: " + ($wmidata.TotalPhysicalMemory / 1GB)
```

Yes, you can skip all that conversion math between bytes, KB, MB, and GB: PowerShell treats KB, MB, and GB (plus TB and PB) as numeric multipliers, so 1GB is 1073741824 (1024 * 1024 * 1024).
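Here's a small sketch of the multiplier trick with the byte count from the sample output plugged in, plus two common ways to round the result for display ([math]::Round and the -f format operator; my additions, not part of the original script).

```powershell
# Byte count from the "Total RAM" line in the sample output
$totalBytes = 4158242816

# The multipliers are binary units: 1GB is 1024^3 bytes, not 1000^3
1GB -eq 1024 * 1024 * 1024

# Divide and round to two decimal places for a readable figure
$totalGB = [math]::Round($totalBytes / 1GB, 2)
"Total RAM: " + $totalGB + " GB"

# The -f format operator rounds and builds the string in one step
# (N2 uses the current culture's number format)
"Total RAM: {0:N2} GB" -f ($totalBytes / 1GB)
```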

So, we have a script that can connect to a remote computer (assuming you have permission), gather WMI data, and display it on the screen for you. And it took us, what, 2 minutes of typing? With a little imagination, you can see how to wrap this in a foreach loop and rip through a whole list of servers in a matter of minutes. And the fun doesn't stop there!
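Here's a minimal sketch of that loop, assuming a hypothetical list of server names. The try/catch with -ErrorAction Stop is my addition, so one unreachable machine doesn't stop the whole run. Note that Get-WmiObject is a Windows PowerShell (5.1 and earlier) cmdlet; in PowerShell 6 and later you'd reach for Get-CimInstance instead.

```powershell
# Hypothetical server names; swap in your own, or read them from a file
# with something like: $computers = Get-Content .\servers.txt
$computers = 'server01', 'server02', 'server03'

$report = foreach ($computer in $computers) {
    try {
        # -ErrorAction Stop turns a failed connection into a catchable error
        $wmidata = Get-WmiObject -ComputerName $computer -Class Win32_ComputerSystem -ErrorAction Stop
        "Machine name: " + $wmidata.Name
        "Model: " + $wmidata.Model
        "Manufacturer: " + $wmidata.Manufacturer
        "Logged on user: " + $wmidata.UserName
        "Total RAM (GB): " + [math]::Round($wmidata.TotalPhysicalMemory / 1GB, 2)
        ""
    }
    catch {
        # Note the failure and keep going with the rest of the list
        "Could not query ${computer}: " + $_.Exception.Message
        ""
    }
}

$report
```

Each computer produces either a block of details or a single "Could not query" line, so the report always covers the whole list.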

If you're interested in learning PowerShell, I suggest taking a look at Microsoft's PowerShell site. It's open to the public, and it's helpful, although you may find Microsoft's information a bit terse at first. Once you get your head around PowerShell, you'll actually find that kind of information the most helpful. Also, check out the .Net Framework documentation: PowerShell objects are .Net objects, so all of it applies! So, find an itch to scratch and get coding!

Sep 22, 2011 at 15:45

Tom Cameron